This is another article in my continuing commentary on market access and politics. This time the focus is on the behaviour of the people involved, whether from government, industry or healthcare. The people in these roles bring their own psychological perspective to how they apply their knowledge and skills to their work. If you’re familiar with Kahneman’s System 1 and System 2, you’ll know where this article is going.

We are learning all the time about how we, as frail humans, make decisions. Many will know of ‘groupthink’, a type of behaviour in which dissent is suppressed and which often leads to poor outcomes; history is littered with examples. More recently, research into the psychological basis of decision making has produced a deeper understanding. It is now almost commonplace to speak of ‘behavioural economics’ in the context of work, and of ‘heuristics’ to describe the mental shortcuts we use.

Let’s put some of that into the context of decision making about medicines. This is, of course, not to ignore the many other key decisions in the broader healthcare/life sciences sector, including how to set research priorities, where to put scarce research money or how to improve patient compliance with treatment.

What I’ll do in this short article is address how payers decide whether to adopt and reimburse a medicine. I suspect what springs to mind here is health technology assessment (HTA) methodologies, reference pricing and other structured processes. Indeed, the adoption of HTA in various forms has certainly had an impact on the medicines market, as it is the most systematic approach to the formal assessment of a medicine or device. HTA has been slow, though, to incorporate value judgements and subjective information, because it has been driven by people who believe that, for HTA to be objective, it must rest on quantification. As a result, decision processes have had scrupulously to avoid ethical or social considerations. There is legitimate criticism of this, too, because in the end the quantitative HTA analysis itself rests on socially and ethically relevant considerations. However, many in HTA believe that value judgements compromise the scientific objectivity of the work. [Hofmann]

Regulators and payers are increasingly adopting each other’s methodologies. Regulators have focused on quality, safety and risk, while payers have focused on HTA, budget impact and so on. The emerging shared focus is relative efficacy and effectiveness assessment (as this subsumes many of the other factors anyway). Methodologically, the regulatory fixation on RCTs is loosening: RCTs are now just one source of evidence, alongside observational studies, meta-analysis and even the possibilities opening up from big data and artificial intelligence (computational/predictive analytics).

As regulators and payers converge on how they view their work (focus) and how they do it (methods), they are more likely to share common perceptions and biases in what they do. As an example, a recent paper [Steenkamer] identified shared and divergent views on population health management among various stakeholders in the Dutch healthcare system. The paper finds that people change their reasoning or behaviour as resources and opportunities change, and that this influences outcomes. That is why it is important to pay attention to payers’ priorities and to what constrains or enables them.

Decision making and priority setting in this way involve substantial quantitative analysis and confidence in the methods used. In practice, most decisions are probably ‘good enough’, which is called satisficing: it is always possible to spend more mental energy looking for the optimal solution, but that costs time and money. Satisficing is one way to lower the cognitive load (the work of thinking) by avoiding additional information that would make the decision more complex (or indeed ‘wicked’). However, heavy reliance on quantitative analysis can produce overconfidence in its results, or even mislead, so that decisions risk being shaped by an approach that knows the price of everything but the ‘value’ of nothing. This is called decoupling. It can lead decision makers to prefer decisions that flow from data, treating data as a proxy for objectivity that can be used to discount or ignore criticism (e.g. ‘it lacks an empirical basis’, ‘that’s just your opinion’). Management speak is full of jargon that, in one way or another, is designed to suppress dissent.
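Satisficing, as described above, can be contrasted with optimising in a few lines of Python. This is a minimal sketch of Simon’s idea, not a model of any real payer process: the option names, scores and aspiration level are hypothetical. The satisficer stops at the first option that clears the aspiration level, so it does less work but may miss a better option further down the list.

```python
from typing import Callable, Iterable, Optional, Sequence, Tuple, TypeVar

T = TypeVar("T")


def satisfice(options: Iterable[T], score: Callable[[T], float],
              aspiration: float) -> Optional[T]:
    """Return the first option whose score meets the aspiration level.

    The search stops as soon as something is 'good enough', so options
    further down the list (which might score higher) are never examined.
    """
    for option in options:
        if score(option) >= aspiration:
            return option
    return None  # nothing met the aspiration level


def optimise(options: Sequence[T], score: Callable[[T], float]) -> T:
    """Examine every option and return the highest-scoring one."""
    return max(options, key=score)


def by_score(option: Tuple[str, float]) -> float:
    """Hypothetical scoring rule: use the number stored with each option."""
    return option[1]


# Hypothetical options, reviewed in the order they happen to arrive.
options = [("Option A", 0.60), ("Option B", 0.75), ("Option C", 0.90)]

print(satisfice(options, by_score, aspiration=0.70))  # ('Option B', 0.75)
print(optimise(options, by_score))                    # ('Option C', 0.9)
```

The satisficer evaluates two options and settles; the optimiser evaluates all three. That saving in effort is the lower cognitive load, and the unexamined Option C is its price.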

Consider the underlying logic of reference pricing. It is used to anchor quantitative models, but this is a possible source of error, because the anchoring heuristic limits the ability to interpret other pricing models. This type of international benchmarking assumes that others might know better, and it attributes too much knowledge to a basket of countries whose decision makers are similarly hoping that others know better. Thinking this way reflects the availability bias: it is easier to use data that are readily available (from other countries). Confirmation bias is also at work, as knowing what others have paid makes our own decisions easier to accept.
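To make the anchoring point concrete, here is a minimal Python sketch of external reference pricing. The basket, the prices and the three rules (lowest, mean or median price in the basket) are hypothetical illustrations; real reference pricing systems differ from country to country in basket composition, adjustments and rules. Whichever rule is chosen, the output is built entirely from what other countries paid, which is exactly where anchoring and availability enter.

```python
from statistics import mean, median
from typing import Dict


def reference_price(basket: Dict[str, float], rule: str = "mean") -> float:
    """Derive a reference price from prices paid in a basket of countries.

    No local evidence of value enters the calculation: the result is
    anchored entirely on other countries' prices.
    """
    prices = list(basket.values())
    if not prices:
        raise ValueError("reference basket is empty")
    if rule == "min":
        return min(prices)
    if rule == "mean":
        return mean(prices)
    if rule == "median":
        return median(prices)
    raise ValueError(f"unknown rule: {rule!r}")


# Hypothetical basket: list prices for the same medicine in three countries.
basket = {"Country A": 1000.0, "Country B": 1200.0, "Country C": 900.0}

for rule in ("min", "mean", "median"):
    print(rule, round(reference_price(basket, rule), 2))
# min 900.0
# mean 1033.33
# median 1000.0
```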

I should add that payers are influenced by hyperbolic discounting, which means they tend to value an immediate gain more highly than a larger gain later. For market access, this means that sometimes a bird in the hand is worth two in the bush. Hyperbolic discounting can be a trap for industry, too, as a company may offer a discount (the bird) rather than a more complex market access arrangement (the bush) because of short-term performance targets. You decide.
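The ‘bird in the hand’ effect can be illustrated with a commonly used one-parameter hyperbolic discount function, V = A / (1 + kD), where A is the amount, D the delay and k a discount parameter. The sketch below is hypothetical throughout: the amounts, delays and k = 1 per year are chosen only to show the characteristic preference reversal. Offered 100 now or 150 in a year, the hyperbolic discounter takes 100; push both options five years into the future and the same discounter now prefers the larger, later gain. An exponential discounter with a constant rate makes the same choice at both horizons.

```python
from typing import Callable

DiscountFn = Callable[[float, float, float], float]


def hyperbolic_value(amount: float, delay: float, k: float) -> float:
    """Present value under hyperbolic discounting: V = A / (1 + k * D)."""
    return amount / (1 + k * delay)


def exponential_value(amount: float, delay: float, rate: float) -> float:
    """Present value under exponential discounting: V = A / (1 + r) ** D."""
    return amount / (1 + rate) ** delay


def prefers_sooner(value: DiscountFn, small: float, t_small: float,
                   large: float, t_large: float, param: float) -> bool:
    """True if the smaller, sooner amount is valued above the larger, later one."""
    return value(small, t_small, param) > value(large, t_large, param)


# Hypothetical choice: 100 units at `start` vs 150 units one year later.
for start in (0, 5):
    hyp = prefers_sooner(hyperbolic_value, 100, start, 150, start + 1, 1.0)
    expo = prefers_sooner(exponential_value, 100, start, 150, start + 1, 1.0)
    print(f"start={start}: hyperbolic sooner={hyp}, exponential sooner={expo}")
# start=0: hyperbolic sooner=True, exponential sooner=True
# start=5: hyperbolic sooner=False, exponential sooner=True
```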

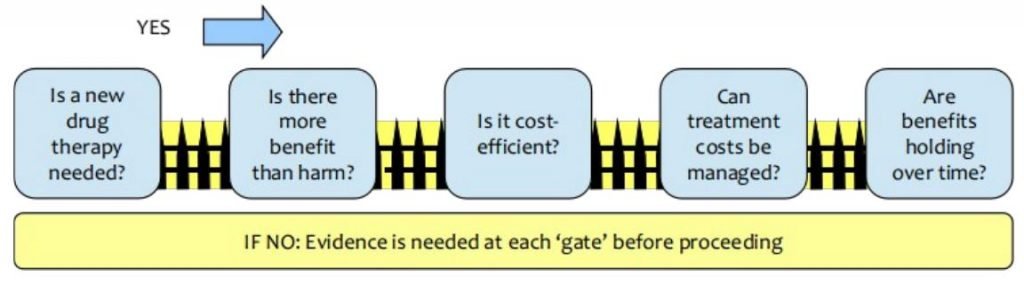

All this needs to be useful, so I have put it into a workable framework of five questions that frame payer decision making. I developed this model some years ago, using the measurement iterative loop [Tugwell] as its basis, because I wanted to understand the primary determinants of payer decision making.

The result is a ‘gated process’, as shown in the diagram above.

You work through the questions in order, and you want a YES answer to each. If you get a NO, you stop at that point: you cannot proceed further until you convert the NO into a YES, which requires new evidence that changes the decision maker’s response. Jumping the gates conflicts with the basis of the model itself, because the order matters. It determines the sequence in which decisions are made and the appropriateness of the evidence on offer at each step and, importantly, it reduces the complexity of the decision by breaking it into chunks, which aids comprehension.
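The gating rule itself is simple enough to sketch in Python. The question wording is taken from the five questions in this article; everything else is a hypothetical illustration, including treating an unanswered question as a NO (on the reasoning that, without evidence, the gate stays shut). The function returns the first closed gate and never looks at later gates, mirroring the rule that you cannot proceed until that NO becomes a YES.

```python
from typing import Mapping, Optional

# The five gates, in the order they must be passed.
GATES = (
    "Is a new therapy needed?",
    "Is there more benefit than harm?",
    "Is it cost-effective?",
    "Can treatment costs be managed?",
    "Are benefits holding over time?",
)


def first_closed_gate(answers: Mapping[str, bool]) -> Optional[str]:
    """Walk the gates in order and return the first one not answered YES.

    A missing answer is treated as NO (an assumption of this sketch).
    Later gates are not examined once a gate is closed. Returns None only
    when every gate is answered YES.
    """
    for question in GATES:
        if not answers.get(question, False):
            return question
    return None


# Hypothetical dossier: strong on need and benefit/harm, weak on cost-effectiveness.
answers = {
    GATES[0]: True,
    GATES[1]: True,
    GATES[2]: False,
    GATES[3]: True,  # never reached while gate 3 is closed
}
print(first_closed_gate(answers))  # Is it cost-effective?
```

Supplying new evidence that flips the cost-effectiveness answer to True and re-running the check moves the blockage to the next unresolved gate, here question 5, which has no answer yet.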

The questions are framed for a new medicine coming to market.

Question 1: Is a new therapy needed?

Why should a payer change if they already have choices? A new product introduces unfamiliarity to the decision makers, perhaps through a novel mechanism of action.

Question 2: Is there more benefit than harm?

Is there an increase in risk? Here we see, for instance, fear of the unknown.

Question 3: Is it cost-effective?

Two kinds of quantitative measure are involved: measures of cost and measures of effectiveness. There may be evidence on effectiveness, but what do you know about the cost drivers?
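The article does not prescribe a particular metric, but one common way HTA bodies combine the two measures is the incremental cost-effectiveness ratio (ICER): the extra cost of the new medicine divided by its extra effect relative to a comparator, often expressed per quality-adjusted life year (QALY). In the simplified rule below, $\lambda$ stands for whatever willingness-to-pay per unit of effect a payer applies; $\lambda$ and the figures in the worked example are hypothetical.

$$
\mathrm{ICER} = \frac{C_{\text{new}} - C_{\text{comp}}}{E_{\text{new}} - E_{\text{comp}}} = \frac{\Delta C}{\Delta E},
\qquad \text{answer YES if } \Delta E > 0 \text{ and } \mathrm{ICER} \le \lambda .
$$

For example, with $\Delta C = 20\,000$ and $\Delta E = 0.5$ QALY:

$$
\mathrm{ICER} = \frac{20\,000}{0.5} = 40\,000 \text{ per QALY},
$$

so the answer to Question 3 would be NO against a threshold of 30,000 per QALY and YES against 50,000. This is also why cost drivers matter: a modest change in $\Delta C$ can move the ratio across the threshold.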

Question 4: Can treatment costs be managed?

Probably every country is struggling with cost control, and most would argue that their controls are not working as well as they would like, so you may face a NO by default (an automatic no). Indeed, financial sustainability is generally a top priority for governments, payers, insurers and patients.

Question 5: Are benefits holding over time?

Although claims about the future are difficult to make, analysing the future benefits of treatment is becoming an important part of pricing: a baseline period of years is set over which a medicine’s costs and benefits are formally assessed.
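Assessing costs and benefits over a period of years usually means discounting future values back to the present. The Python sketch below is a minimal illustration, not any HTA body’s method: the five-year horizon, the 3.5% annual rate and the benefit profiles are all hypothetical. It shows why Question 5 matters: if the benefit wanes, its discounted total is much smaller than if it holds.

```python
from typing import Sequence


def present_value(stream: Sequence[float], rate: float) -> float:
    """Discount a stream of yearly values to today; year 0 is not discounted."""
    return sum(value / (1 + rate) ** year for year, value in enumerate(stream))


RATE = 0.035  # hypothetical annual discount rate

# Hypothetical yearly benefit (e.g. QALYs gained) over a five-year horizon.
benefit_holds = [1.0, 1.0, 1.0, 1.0, 1.0]
benefit_wanes = [1.0, 0.8, 0.6, 0.4, 0.2]

print(round(present_value(benefit_holds, RATE), 2))  # 4.67
print(round(present_value(benefit_wanes, RATE), 2))  # 2.87
```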

I’d suggest paying close attention to the Triple Aim [Berwick] (improving the experience of care, improving the health of populations and reducing the per capita cost of care) as a way to frame your thinking when using this gated process. For starters, I would advocate conducting an ‘impact assessment’ to determine how a medicine performs against the Triple Aim objectives; it can also reveal new value drivers. This takes time and money, but may in the end be less expensive than failure or a weakened market access strategy (persisting with a failing strategy is called the disposition effect).

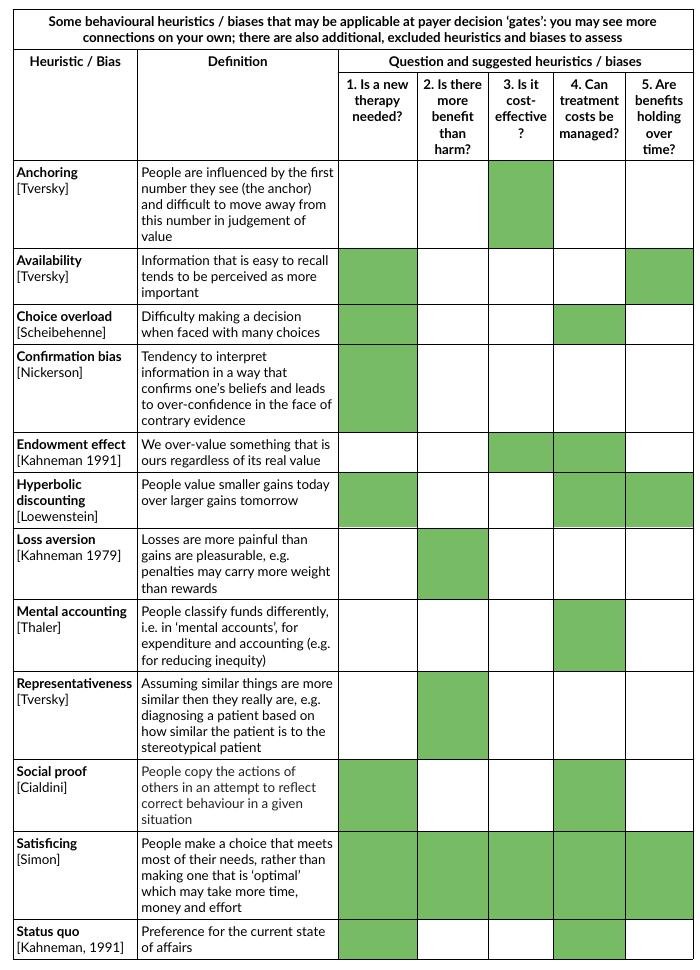

I’ve summarised some applicable behavioural heuristics in this table for convenience.

As you can see, heuristics can have broad applicability. The papers cited in the table are listed in the References at the end of this post. This is by no means the complete story, but I hope it has shed some useful light on this area.

Putting this to work

So, may I suggest the following ways to put this psychological knowledge to work for you in dealing with the questions in this gated payer decision process:

Incentives: Use incentives that payers will value; there is no point guessing what they will value. It also helps to know whether your own company’s incentives are actually conducive to achieving the desired results, bearing in mind that what incentivises you is unlikely to incentivise payers.

Norms: Use other countries with similar challenges to demonstrate good practice. This helps lower the perceived risk, as payers are highly averse to being the first to do something. And if you have solved a problem in a country that a payer uses in their reference basket, this can contextualise the benefit for the cost.

Defaults: Understand the ‘pre-set’ or starting position of payers, as this reflects their ‘go with the flow’ preferences; it could be scepticism, or an automatic ‘no’. Also, know where you draw the line. Pricing corridors are a crude tool for assessing affordability, payer attitudes and price thresholds, and they may create a false sense of what the evidence shows.

Salience: Novelty needs to be linked to the payers’ experience, otherwise they won’t recognise or value it. Pay close attention to the language you use to convey value. Many people may not recognise as novel what you think is the best thing since sliced bread.

Priming: Choose influencing language carefully: it can prime an immediate negative response just as it can prime longer-term attentiveness. That means ensuring that your communication strategy is well formed.

Affect: Note relevant emotions and what triggers these responses. It pays to know when you are pushing at a closed door to avoid eliciting frustration. A key behavioural red flag is ‘irritation’.

Commitments: Payers need to remain broadly consistent with their public promises and strategies; any approach that puts them in conflict with these will fail.

Ego: Payers need to know/feel that they are making the right decisions at a personal level (so they can be proud of their choices, for instance). I’m sure you know the feeling.

Want to know more?

Gilovich T, Griffin D, Kahneman D (eds.). Heuristics and biases: The psychology of intuitive judgment. Cambridge: CUP, 2002.

References in this article

Berwick DM, Nolan TW, Whittington J. The triple aim: Care, health, and cost. Health Aff. 2008;27(3):759-769.

Caro JJ, Brazier JE, Karnon J, et al. Determining value in health technology assessment: Stay the course or tack away? PharmacoEconomics. 2019;37(3):293-299.

Cialdini R. Influence: Science and practice. 5th ed. Boston: Pearson, 2009.

Eichler HG, et al. Relative efficacy of drugs: an emerging issue between regulatory agencies and third-party payers. Nat Rev Drug Discov. 2010;9:277-291.

Frederick S, Loewenstein G, O’Donoghue T. Time discounting and time preference: A critical review. J Econ Lit. 2002;40(2):351-401.

Hofmann B, Cleemput I, Bond K, et al. Revealing and acknowledging value judgements in health technology assessment. Int J Technol Assess Health Care. 2014;30(6):579-586.

Kahneman D, Knetsch JL, Thaler RH. Anomalies: The endowment effect, loss aversion, and status quo bias. J Econ Perspect. 1991;5(1):193-206.

Kahneman D, Tversky A. Prospect theory: An analysis of decision under risk. Econometrica. 1979;47(2):263-292.

Nickerson RS. Confirmation bias: A ubiquitous phenomenon in many guises. Rev Gen Psychol. 1998;2(2):175-220.

Scheibehenne B, Greifeneder R, Todd PM. Can there be too many options? A meta-analytic review of choice overload. J Consum Res. 2010;37(3):409-425.

Simon HA. Rational choice and the structure of the environment. Psychol Rev. 1956;63(2):129-138.

Steenkamer BM, Drewes HW, van Vooren N, Baan CA, van Oers H, Putters K. How executives’ expectations and experiences shape population health management strategies. BMC Health Serv Res. 2019;19(1):757.

Thaler RH. Misbehaving: The making of behavioral economics. New York: Norton, 2015.

Tromp N, Baltussen R. Mapping of multiple criteria for priority setting for health interventions: An aid for decision makers. BMC Health Serv Res. 2012;12:454.

Tugwell P, Bennett KJ, Sackett DL, Haynes RB. The measurement iterative loop: A framework for the critical appraisal of need, benefits and costs of health interventions. J Chronic Dis. 1985;38(4):339-351.

Tversky A, Kahneman D. Judgment under uncertainty: Heuristics and biases. Science. 1974;185(4157):1124-1131.